Medical Education: Resident

Category: Abstract Submission

Medical Education 7 - Medical Education: Potpourri

156 - Validation of an EPA-based Assessment Tool in a Pediatric Resident Resuscitation Simulation Curriculum

Saturday, April 23, 2022

3:30 PM - 6:00 PM US MT

Poster Number: 156

Publication Number: 156.218

Masrur A. Khan, Children's Hospital Los Angeles, Los Angeles, CA, United States; Matthew Baker, Children's Hospital Los Angeles, Los Angeles, CA, United States; Todd P. Chang, Children's Hospital Los Angeles, Los Angeles, CA, United States; Denizhan Akan, USC/Keck School of Medicine/CHLA, Los Angeles, CA, United States

Masrur A. Khan, MD

Resident Physician

Children's Hospital Los Angeles

Los Angeles, California, United States

Presenting Author(s)

Background: Pediatric resuscitation events are infrequent and inherently difficult settings for competency assessment. Simulations provide an opportunity for training in resuscitation, but no standardized assessment tool exists.

Objective: This study aims to assess the validity of a novel evaluation tool, which uses Entrustable Professional Activities (EPAs) as a framework, for competency measurement in a simulation curriculum in a residency training program.

Design/Methods: An EPA-based evaluation tool was adapted and implemented for self- and facilitator evaluation in a pediatric residency mock code simulation curriculum (Figure 1). Independent evaluators reviewed video recordings of the simulations. To validate the internal structure of the tool as per Messick's framework, the intraclass correlation coefficient (ICC) was calculated using a two-way mixed-effects model based on consistency.
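The abstract does not state which software was used for the ICC, so the following is only a minimal sketch of how a two-way mixed-effects, consistency-type ICC can be computed from a subjects-by-raters table of scores. The function name `icc_consistency` and the example ratings are hypothetical and are not study data; the formulas are the standard mean-square forms for the single-measure and average-measures consistency ICC.

```python
from statistics import mean


def icc_consistency(ratings):
    """Two-way mixed-effects, consistency-type ICC.

    ratings: list of rows, one row per rated subject (e.g. one simulation),
             each row holding one score per rater, in the same rater order.
    Returns (single_measure_icc, average_measures_icc).
    """
    n = len(ratings)                      # number of subjects
    k = len(ratings[0]) if n else 0       # number of raters
    if n < 2 or k < 2 or any(len(row) != k for row in ratings):
        raise ValueError("need a complete table with >= 2 subjects and >= 2 raters")

    grand = mean(x for row in ratings for x in row)
    row_means = [mean(row) for row in ratings]
    col_means = [mean(row[j] for row in ratings) for j in range(k)]

    # Two-way ANOVA sums of squares (one observation per cell).
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)   # between subjects
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)   # between raters
    ss_err = ss_total - ss_rows - ss_cols                    # residual

    ms_rows = ss_rows / (n - 1)
    ms_err = ss_err / ((n - 1) * (k - 1))

    # Consistency definition: systematic rater offsets are ignored.
    single = (ms_rows - ms_err) / (ms_rows + (k - 1) * ms_err)
    average = (ms_rows - ms_err) / ms_rows
    return single, average


if __name__ == "__main__":
    # Hypothetical scores: 5 simulations, each rated by 2 reviewers.
    example = [[2, 3], [3, 3], [1, 2], [4, 4], [2, 2]]
    single, average = icc_consistency(example)
    print(f"single-measure ICC: {single:.3f}")
    print(f"average-measures ICC: {average:.3f}")
```

The second returned value corresponds to the "average measures" form reported in the Results. Because the consistency definition removes systematic differences between raters, a reviewer who always scores one point higher than another does not lower the coefficient.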

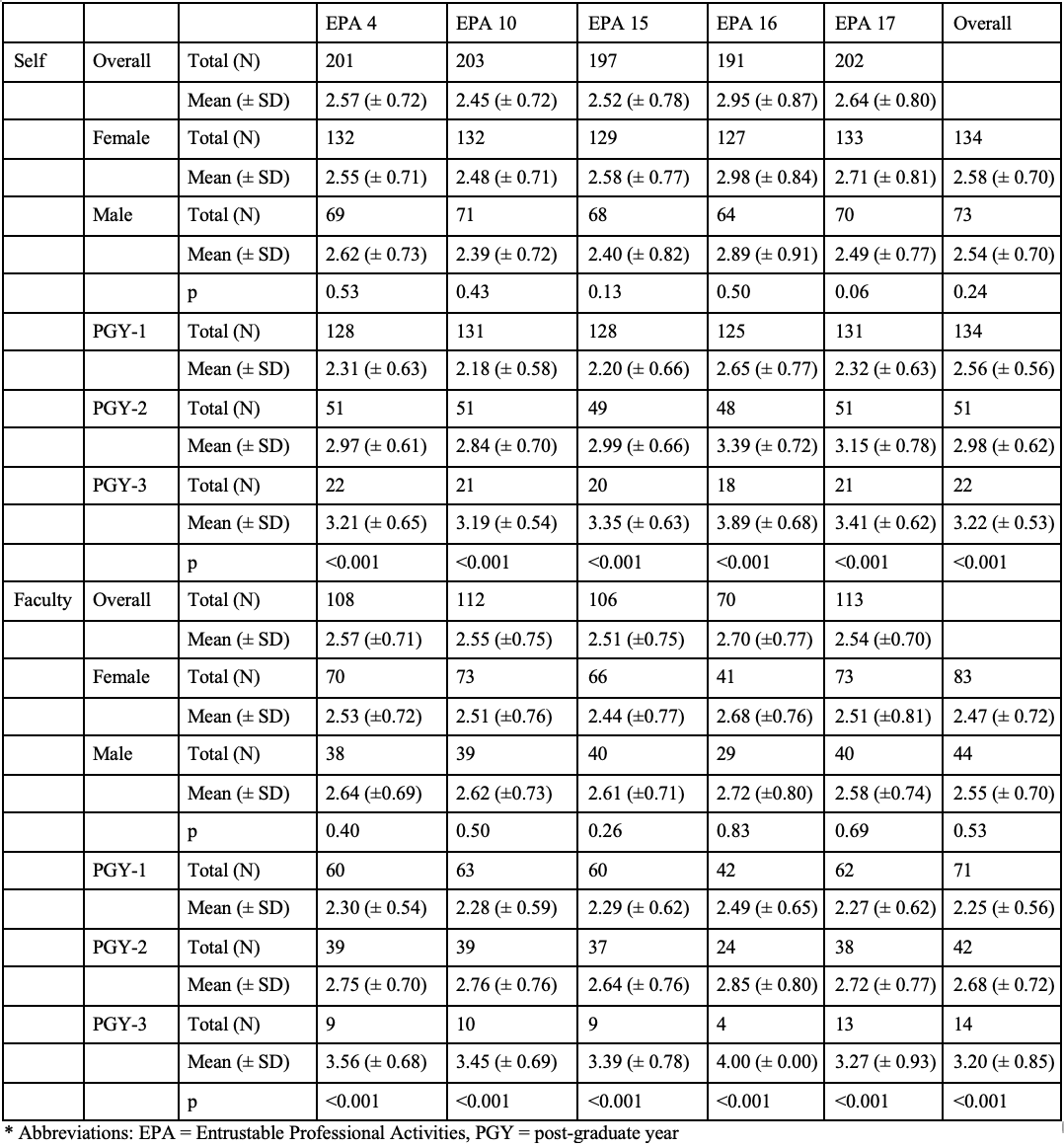

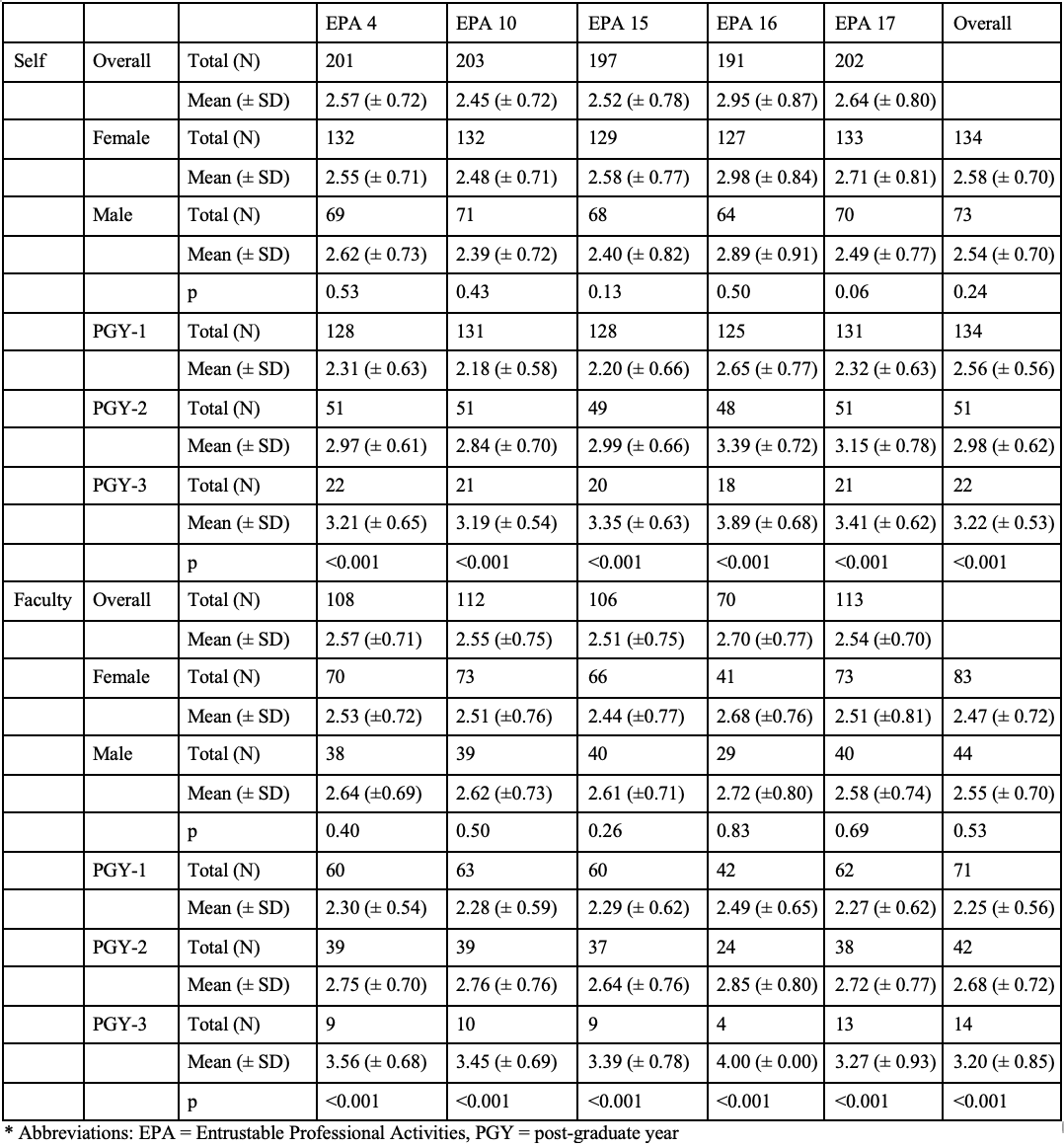

Results: Between July 2019 and March 2021, there were 139 residents in the program, of whom 77 (55.4%) submitted at least 1 evaluation; 39 (50.6%) were postgraduate year (PGY)-1, 22 (28.6%) were PGY-2, and 16 (20.8%) were PGY-3 (Table 1). Across 44 simulations, 214 evaluations were completed. Of these, 140 (65.4%) were completed by female residents and 74 (34.6%) by male residents. By training level, 135 (63.1%) evaluations were completed by PGY-1 residents, 55 (25.7%) by PGY-2 residents, and 24 (11.2%) by PGY-3 residents. There was no statistically significant difference between female and male residents in self-evaluations (2.58 ± 0.70 versus 2.54 ± 0.70, p = 0.24) or faculty evaluations (2.47 ± 0.72 versus 2.55 ± 0.70, p = 0.53). However, increasing PGY level correlated with better scores for both self- and faculty evaluations (non-parametric: ρ = 0.57, p < 0.001; and ρ = 0.40, p < 0.001, respectively). On comparison with video review for validation, the ICC (two-way mixed, average measures) was 0.698 (95% CI 0.58–0.79, p < 0.001), indicating moderate reliability.

Conclusion(s): The EPA-based evaluation tool demonstrated consistency across gender, simulation setting and scenario, and independent evaluators using video review, and it reflected the expected progression of competency development with training level. The study results provide supportive evidence that the EPA framework can be extended to the simulation environment in the context of a trainee evaluation tool.

Table 1 Breakdown of the 5 assessed EPAs (both self and faculty) by sex and PGY status.
