Medical Education: Fellow

Category: Abstract Submission

Medical Education 3 - Medical Education: Fellow I

577 - A Novel Tool to Assess Subspeciality Fellow Pediatric End of Life Care Skills

Friday, April 22, 2022

6:15 PM - 8:45 PM US MT

Poster Number: 577

Publication Number: 577.117

Johannah M. Scheurer, University of Minnesota, Minneapolis, MN, United States; Jeffrey K. Bye, University of Minnesota, Minneapolis, MN, United States; Heidi Kamrath, Children's Hospitals and Clinics of Minnesota, St. Paul, MN, United States; Naomi Goloff, McGill University Faculty of Medicine and Health Sciences, Montreal, PQ, Canada; Sonja J. Meiers, University of Wisconsin-Eau Claire, College of Nursing and Health Sciences, Rochester, MN, United States; Jean Petershack, UT Health San Antonio, San Antonio, TX, United States

Johannah M. Scheurer, MD (she/her/hers)

Assistant Professor

University of Minnesota

Minneapolis, Minnesota, United States

Presenting Author(s)

Background: Assessing pediatric end-of-life care skills (PECS) and providing high-quality, actionable feedback on this difficult and distressing skill set is challenging.

Objective: Locally, subspecialty fellows gain competence in these skills through workshops that use a deliberate practice model. These workshops provide an excellent environment in which to test a novel tool that assesses, for entrustment, the critical elements of competent pediatric end-of-life (EOL) care. We report an analysis of validity evidence from pilot testing of the tool.

Design/Methods: With iterative expert input, we created a learner checklist for use during direct observation, based on the gold-standard Modified Kalamazoo Communication Skills Assessment. The checklist assesses 8 domains plus overall competence and provides trainees with formative feedback. For the pilot, trained standardized patients (SPs) and interprofessional facilitators used the tool during 4 workshops from 2019 to 2021. For internal structure evidence, we examined inter-rater reliability among SPs and among faculty for each sub-competency and for overall competence using Krippendorff's alpha. For response process evidence, we quantitatively analyzed facilitator agreement on 3 Likert-type survey items about tool use.
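Krippendorff's alpha compares the disagreement observed among raters with the disagreement expected by chance, α = 1 − D_o/D_e, and it tolerates missing ratings, which suits a design in which not every rater scores every fellow. The abstract does not state which distance metric was used, so the Python sketch below is a hypothetical illustration, not the authors' analysis code. The function name `krippendorff_alpha`, the raters × units layout with `None` for a missing rating, and the three supported metrics (nominal, ordinal, interval) are our assumptions; for checklist ratings on an ordered scale, the ordinal metric is one plausible choice.

```python
from collections import Counter
from itertools import permutations
from typing import Optional, Sequence


def krippendorff_alpha(
    data: Sequence[Sequence[Optional[float]]],
    metric: str = "nominal",
) -> float:
    """Krippendorff's alpha for a raters x units matrix (hypothetical helper).

    Each row is one rater and each column one rated unit (e.g., one fellow's
    performance on one checklist domain); None marks a missing rating.
    metric is "nominal", "ordinal", or "interval".
    """
    # Coincidence matrix o[(c, k)]: every ordered pair of ratings from two
    # different raters on the same unit, weighted by 1 / (m_u - 1).
    coincidence: Counter = Counter()
    for u in range(len(data[0])):  # assumes all rows have equal length
        values = [row[u] for row in data if row[u] is not None]
        m_u = len(values)
        if m_u < 2:
            continue  # a unit with fewer than 2 ratings is not pairable
        for i, j in permutations(range(m_u), 2):
            coincidence[(values[i], values[j])] += 1.0 / (m_u - 1)

    # Marginal totals n_c and the number of pairable values n.
    n_c: Counter = Counter()
    for (c, _k), weight in coincidence.items():
        n_c[c] += weight
    n = sum(n_c.values())
    if n == 0:
        raise ValueError("no unit was rated by at least two raters")
    categories = sorted(n_c)

    def delta2(c: float, k: float) -> float:
        """Squared distance between two rating values under the chosen metric."""
        if c == k:
            return 0.0
        if metric == "nominal":
            return 1.0
        if metric == "interval":
            return float(c - k) ** 2
        if metric == "ordinal":
            lo, hi = sorted((c, k))
            between = sum(n_c[g] for g in categories if lo <= g <= hi)
            return (between - (n_c[lo] + n_c[hi]) / 2.0) ** 2
        raise ValueError(f"unknown metric: {metric!r}")

    # Observed (D_o) and chance-expected (D_e) disagreement.
    d_o = sum(w * delta2(c, k) for (c, k), w in coincidence.items()) / n
    d_e = sum(
        n_c[c] * n_c[k] * delta2(c, k) for c in categories for k in categories
    ) / (n * (n - 1))
    if d_e == 0:
        return float("nan")  # no variation in ratings: alpha is undefined
    return 1.0 - d_o / d_e


if __name__ == "__main__":
    # Krippendorff's 4-rater, 12-unit teaching example (None = missing).
    example = [
        [1, 2, 3, 3, 2, 1, 4, 1, 2, None, None, None],
        [1, 2, 3, 3, 2, 2, 4, 1, 2, 5, None, 3],
        [None, 3, 3, 3, 2, 3, 4, 2, 2, 5, 1, None],
        [1, 2, 3, 3, 2, 4, 4, 1, 2, 5, 1, None],
    ]
    for m in ("nominal", "ordinal", "interval"):
        print(m, round(krippendorff_alpha(example, m), 3))
    # Prints one line each: nominal 0.743, ordinal 0.815, interval 0.849
```

In a design like this one, each checklist domain (and overall competence) would be its own matrix, and alpha would be computed separately for the SP raters and for the faculty raters.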

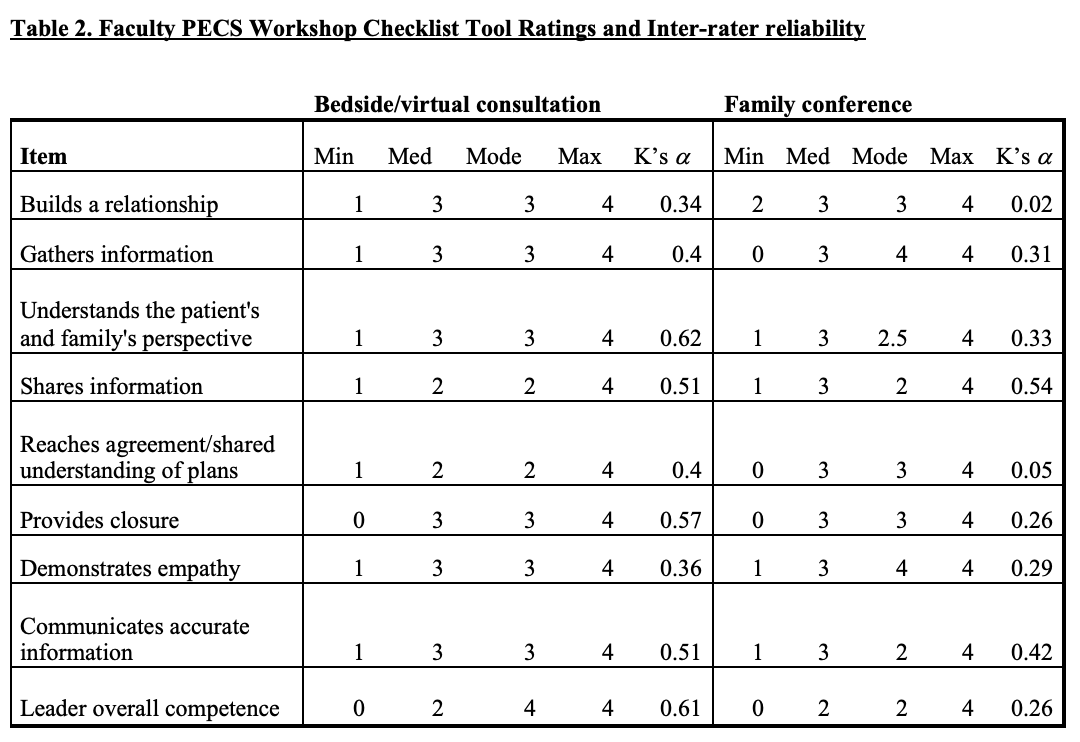

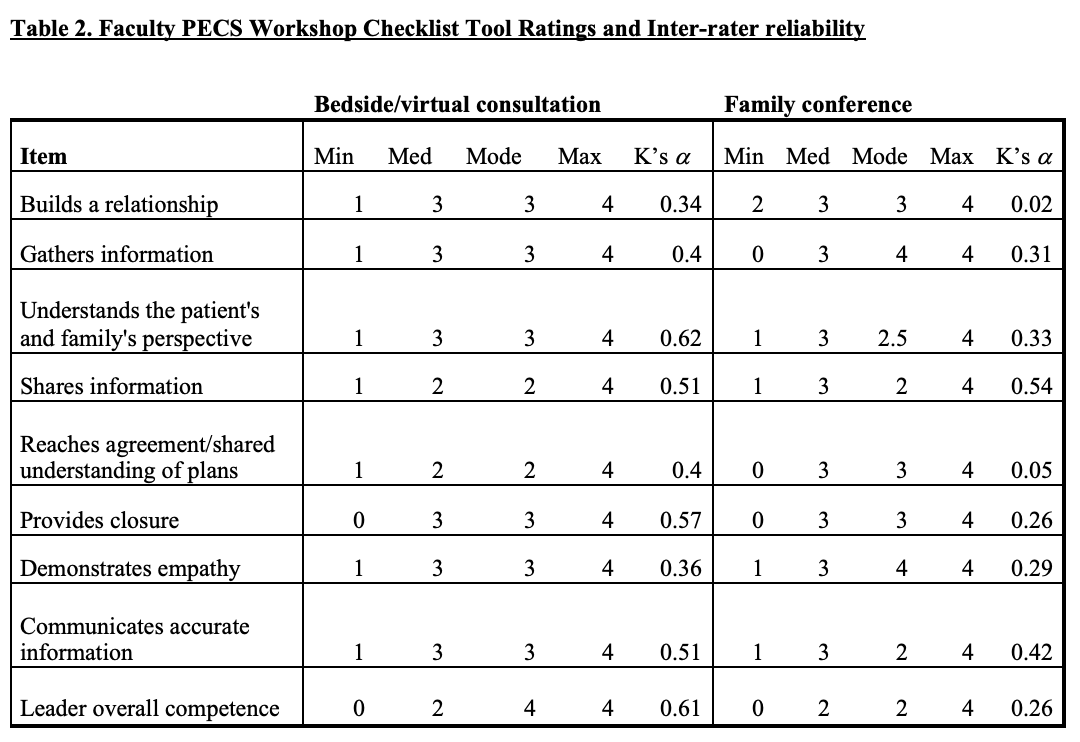

Results: A total of 130 ratings of 31 unique participants were completed. Raters were 8 SPs and 12 facilitators (6 physicians, 2 social workers, 2 chaplains, and 2 bereaved parent consultants). For both scenarios, inter-rater reliability was higher among SPs (Table 1; sub-competency K-alpha 0.19-0.61, Mdn = 0.52; overall competence 0.61 and 0.68) than among faculty (Table 2; sub-competency K-alpha 0.02-0.62, Mdn = 0.38; overall competence 0.26 and 0.61). Faculty respondents (n = 10) agreed that the checklist was useful for evaluating skills and framing post-encounter feedback (90-100% agreement on each of the 3 survey items).

Conclusion(s): Early evidence suggests that the novel PECS workshop checklist tool is usable and integral to framing formative feedback. Internal structure evidence shows variable inter-rater reliability across the 8 domains and overall competence, which informs additional content validity work: further refinement of the items and their descriptions. We are developing more robust rater training, particularly given that our interprofessional faculty come from varied backgrounds. Further analysis will include internal consistency, reliability, and expert-novice and subspecialty associations. Our iterative goal is to develop a competency-based tool for entrustment and formative feedback in the clinical learning environment.

Table 1. Standardized Patients PECS Workshop Checklist Tool Ratings and Inter-rater Reliability

Ratings of subspecialty fellows by 8 standardized patients

K's a: Krippendorff's alpha

Table 2. Faculty PECS Workshop Checklist Tool Ratings and Inter-rater Reliability

Ratings of subspecialty fellows by 12 interprofessional facilitators

K's a: Krippendorff's alpha
